I needed a way to process a ton of information recently. I had a bunch of systems that I could use, each with wildly different levels of resources. I needed to find a way to distribute work to all of these CPU’s and gather results back without missing any data. The answer that I came up with was to create a processing pipeline using zeromq as a message broker. I abstracted the process into three parts: task distribution, processing, and collection.

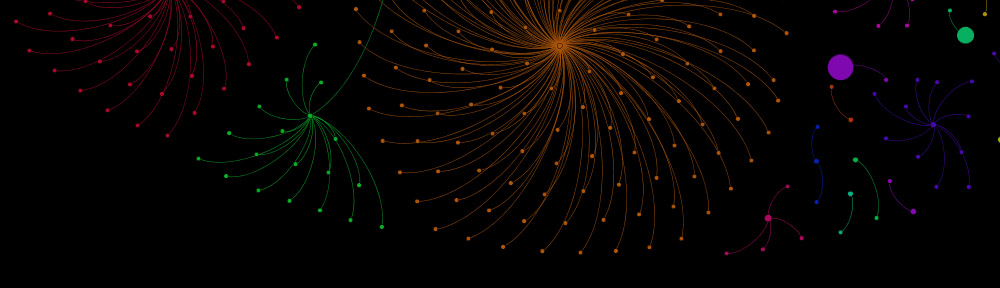

Since Python has a global interpreter lock, I needed to distribute my process using something other than threads. I turned to ZeroMQ since they have a robust message system. One of the ZeroMQ message patterns, the pipeline, was a great jumping off point for my needs. But the pipeline didn’t have any sort of tracking or flow control. I added a feedback loop to keep track of the total items sent and gap detection. The idea is that all work to be processed will have a sequential key. There are three pieces of the app, Start, Middle, and Finish. Start and Finish will use the sequential keys to understand where they are in the process and to replay any missing items.

There can be gotchas. If you don’t set the timing correctly on the apps, you might start replaying long processing jobs too quickly and create a process storm. But for the type of work I was doing it was fairly easy to set a reasonable set of times.